Prediction Without Markets

In the 2008 Summer Olympics, Usain Bolt ran 100 meters in 9.69 seconds, earning the gold medal and garnering the international attention that comes with being the “fastest man in the world.” While Bolt became a household name, his competitors didn’t fare nearly as well: far fewer people know that Richard Thompson and Walter Dix received silver and bronze, and I suspect that eighth-place finisher Darvis Patton is practically unknown outside the sprinting world. The 340 milliseconds that separated Bolt from Patton, roughly the duration of a blink of an eye, was the difference between celebrity and obscurity. While a fascination with rank is perhaps justifiable in sports, such focus (let’s call it the Gold Medal Syndrome) is often problematic in statistical analysis.

Consider the case of prediction markets. In these markets, participants buy and sell securities whose value depends on some future outcome, such as the result of an election, the box office revenue of an upcoming film, or the market share of a new product. For example, the day before the 2008 U.S. presidential election you could have paid $0.92 for a contract in the Iowa Electronic Markets that paid $1 if Barack Obama won, implying a 92% market-estimated probability that Obama would win. There are compelling theoretical reasons to expect prediction markets to outperform all other available forecasting methods. As formalized by the efficient-market hypothesis, if there were some way to beat the market, then at least one savvy trader would presumably exploit that advantage to make money; hence, market prices should update to eliminate any performance disparity.
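The arithmetic behind that argument is simple enough to sketch. A binary contract that pays $1 if an event occurs and sells for $0.92 implies a probability of 0.92, and a trader who believes the true probability is higher expects to profit by buying (lower, by selling). The sketch below assumes a risk-neutral trader and ignores fees, interest, and position limits; the function names are hypothetical and not part of any real market's API.

```python
def implied_probability(price: float, payout: float = 1.0) -> float:
    """Market-implied probability of a binary contract paying `payout` if the event occurs."""
    if not 0.0 <= price <= payout:
        raise ValueError("price must lie between 0 and the payout")
    return price / payout


def expected_profit(price: float, belief: float, payout: float = 1.0) -> float:
    """Expected profit per contract bought at `price` by a risk-neutral trader
    who assigns probability `belief` to the event (fees and risk ignored)."""
    return belief * payout - price


def trade_signal(price: float, belief: float, payout: float = 1.0) -> str:
    """'buy' if the trader thinks the contract is underpriced,
    'sell' if overpriced, 'hold' if the price matches the belief."""
    edge = expected_profit(price, belief, payout)
    if edge > 0:
        return "buy"
    if edge < 0:
        return "sell"
    return "hold"


# The Obama contract from the text: $0.92 for a $1 payout.
print(implied_probability(0.92))        # 0.92
print(trade_signal(0.92, belief=0.97))  # buy
print(trade_signal(0.92, belief=0.85))  # sell
```

Under these assumptions, buying by optimistic traders and selling by pessimistic ones is what pushes the price toward the traders' collective estimate, which is the mechanism the efficient-market argument relies on.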

Inspired by such theoretical arguments, and also by a growing body of empirical findings that show markets beat alternatives, several researchers have called for widespread application of prediction markets to real-world business strategy and policy development problems. In a 2007 Wall Street Journal op-ed, economists Robert Hahn and Paul Tetlock write:

“Imagine the president had a crystal ball to predict more accurately the impact of broader prescription coverage on the Medicare budget, the effect of more frequent audits on tax compliance—or even the consequences of a political settlement in Iraq on oil prices. Now, stop imagining: Such crystal balls [prediction markets] are within our grasp.”

The work on which these appeals are based, however, primarily addresses the relative ranking of prediction methods. By contrast, the magnitude of the differences in question has received much less attention, and as such, it remains unclear whether the performance improvement associated with prediction markets is meaningful from a practical perspective.

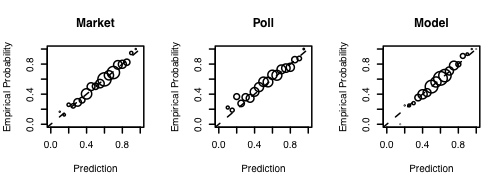

In a new study, Daniel Reeves, Duncan Watts, Dave Pennock and I compare the performance of prediction markets to conventional means of forecasting, namely polls and statistical models. Examining thousands of sporting and movie events, we find that the relative advantage of prediction markets is remarkably small. For example, the Las Vegas market for professional football is only 3% more accurate in predicting final game scores than a simple, three-parameter statistical model, and the market is only 1% better than a poll of football enthusiasts. The accompanying plot shows how the three methods perform on the complementary task of estimating the probability that the home team wins.
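To make a phrase like “3% more accurate” concrete, one can score each method’s forecasts and report the fractional reduction in error relative to a baseline. The sketch below assumes squared error against 0/1 outcomes (the Brier score) for the home-team-wins task and defines improvement as (baseline error − method error) / baseline error; these are illustrative choices, not necessarily the exact metrics used in the paper. The toy forecasts are made up and do not come from the study.

```python
from statistics import mean


def brier_score(probs: list[float], outcomes: list[int]) -> float:
    """Mean squared error between forecast probabilities and 0/1 outcomes (lower is better)."""
    if len(probs) != len(outcomes):
        raise ValueError("probs and outcomes must have the same length")
    return mean((p - y) ** 2 for p, y in zip(probs, outcomes))


def relative_improvement(error_baseline: float, error_method: float) -> float:
    """Fractional reduction in error of a method relative to a baseline (assumed definition)."""
    return (error_baseline - error_method) / error_baseline


# Made-up toy data for illustration only: 1 means the home team won.
outcomes = [1, 0, 1, 1, 0]
market = [0.70, 0.40, 0.65, 0.80, 0.30]  # hypothetical market probabilities
model = [0.65, 0.45, 0.60, 0.75, 0.35]   # hypothetical statistical-model probabilities

market_err = brier_score(market, outcomes)
model_err = brier_score(model, outcomes)
print(f"market: {market_err:.4f}  model: {model_err:.4f}  "
      f"improvement: {relative_improvement(model_err, market_err):.1%}")
```

The same comparison works for point forecasts such as final scores by replacing the Brier score with mean squared error between predicted and actual scores.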

Given that sports and entertainment markets are among the most mature and successful, our results challenge the view that prediction markets are substantively superior to alternative forecasting mechanisms. Nevertheless, there may well be forecasting applications where the relative advantage of markets is larger, or where even small differences in performance are consequential. Thus, while prediction markets may yet prove to be useful, it would seem the enthusiasm for their predictive prowess has outpaced the evidence.

NB: Check out our paper for more details.

Illustration by Kelly Savage