The Future is Yesterday

Independent teams from Yahoo and Google recently demonstrated that search volume for flu-related terms strongly correlates with CDC-reported influenza levels, suggesting that search logs could be used to monitor public health. It is certainly tantalizing to think the same technology that shows Isaiah Thomas is “on fire” today (search-wise, that is) could also help detect the onset of a global pandemic. That possibility, in fact, prompted Steven Levitt to exclaim, “Google will save the world.” The problem is, you don’t really need a big, bad search engine to make flu predictions. Roughly speaking, as a predictor for flu, last week’s flu levels outperform today’s search queries.

So what’s going on? Well, first, neither study is actually predicting (or claiming to predict) future flu levels. Rather, they are predicting what will be reported in future CDC announcements. Each week the CDC collects epidemiological statistics from health care providers, and collecting, processing, analyzing and posting these data usually take about 1-2 weeks. So the actual flu level for the week of March 15, for example, may not be widely reported until the end of the month. What the flu papers show, then, is that search queries lead CDC reports by about 1-2 weeks, but still lag actual flu cases by about a day.

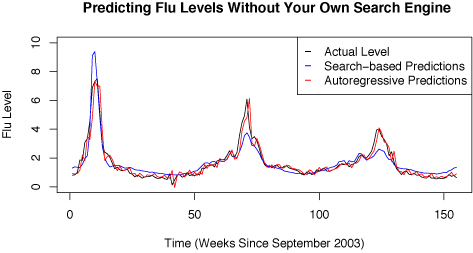

In predicting CDC reports, using search queries appears to do quite well, so well that no one seems to have asked how the search engine approach performs relative to other, more conventional methods. It turns out, perhaps surprisingly, that predicting flu levels is not as hard as it might seem: this week’s and last week’s flu levels together yield a better prediction of next week’s flu level than do next week’s search queries. OK, this is a little confusing, but hopefully it’ll become clear with an example. Suppose I want to know the flu levels for the week of March 15-21. The CDC won’t report statistics for that week until late March or early April. On the other hand, by looking at query logs, on March 22nd I would have a pretty good sense of flu activity for that week. But on March 22nd (or perhaps a few days later), the CDC will have already released statistics for the two weeks of March 1-7 and March 8-14. And data from these two weeks are a comparable (in fact, a bit better) predictor of flu levels during the week of March 15-21 than are search queries from that same week. The plot below shows how remarkably well the simple autoregressive model (i.e., the model based on the latest available CDC reports) predicts the real, retrospectively reported CDC data.

Predictions from an autoregressive model outperform predictions based on search volume. Flu levels are for the South Atlantic Region, one of the nine CDC designated geographical regions.
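For the curious, here is a rough sketch of what such an autoregressive model might look like: an ordinary least-squares fit that predicts one week's flu level from the two weeks before it. This is only an illustration of the general idea, not the exact model behind the plot. The function names (`solve_linear`, `fit_ar2`, `predict_next`) are my own, and the weekly numbers are made up for the example rather than taken from CDC data.

```python
def solve_linear(A, b):
    """Solve a small square system A x = b by Gaussian elimination
    with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]  # augmented matrix
    for col in range(n):
        # Swap in the row with the largest entry in this column.
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= factor * M[col][k]
    # Back substitution.
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][k] * x[k] for k in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


def fit_ar2(levels):
    """Least-squares fit of level[t] ~ c + a*level[t-1] + b*level[t-2].

    Returns the coefficients [c, a, b].
    """
    rows = [[1.0, levels[t - 1], levels[t - 2]] for t in range(2, len(levels))]
    targets = [levels[t] for t in range(2, len(levels))]
    # Normal equations: (X^T X) coef = X^T y.
    XtX = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    Xty = [sum(r[i] * y for r, y in zip(rows, targets)) for i in range(3)]
    return solve_linear(XtX, Xty)


def predict_next(coef, last_week, week_before):
    """Predict a week's flu level from the two most recent reported weeks."""
    c, a, b = coef
    return c + a * last_week + b * week_before


# Made-up weekly flu levels (NOT real CDC data), oldest first.
history = [1.1, 1.3, 1.6, 2.0, 2.6, 3.1, 3.4, 3.3, 2.9, 2.4, 1.9, 1.5]
coef = fit_ar2(history)
print("coefficients [c, a, b]:", coef)
print("predicted next week:", predict_next(coef, history[-1], history[-2]))
```

In the March example, `last_week` would be the CDC figure for March 8-14 and `week_before` the figure for March 1-7, with the output standing in for March 15-21. A fairer comparison with search-based predictions would fit the coefficients only on data available before the week being predicted.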

To be clear, search data are still potentially useful for disease surveillance, particularly in countries where public health infrastructure lags behind Internet access. And a predictive model based on search and historical data does (slightly) outperform a model based on historical data alone. But in light of how well one does by simply looking to the past, predictions based on querying behavior are somewhat less impressive.