TagTime: Stochastic Time Tracking for Space Cadets

This article is co-authored with Bethany Soule.

If you want to (bee)mind how you spend your time (and oh do we ever!) then you need a time tracker.

But there’s a big problem with time tracking tools. You either have to (a) rely on your memory, (b) obsessively clock in and out as you change tasks, or (c) use something like RescueTime that auto-infers what you’re doing.

Well (a) is a non-starter, and (b) is hard if someone comes by and distracts you, for example. It can even be impossible. If you space out and start daydreaming about sugarplumbs [1], you can’t capture that. By definition, if you could think to clock out then it wasn’t spacing out. (And it might be a real problem you’re working on, rather than sugarplumbs [1], but either way.) Maybe we have attention deficit disorder or something but manual time-tracking never worked well for us. That leaves (c), software that monitors what applications or web pages you have open. That really seems to miss the boat for us. You might be hashing out an API design over email or working out an algorithm on paper. Or what if you’re testing your Facebook app, not screwing around on Facebook? [2] There’s no automated test to distinguish wasted time from productive time!


We wanted something completely passive that doesn’t try to automatically infer what you’re doing. You might think “completely passive without automatic inference” is an oxymoron. But we hit on a solution: randomness. The idea is to randomly sample yourself. At unpredictable [3] times, a box pops up and asks, what are you doing right at this moment? You answer with tags each time it pings you. At first this yields noisy estimates but they’re unbiased and over time plenty accurate. It’s just a matter of getting a big enough sample size — collecting enough pings, as we call them. It doesn’t work for fine-grained tracking but over the course of weeks, it gives you a good picture of where your time goes.
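To make the sampling idea concrete, here is a minimal Python sketch. It is not TagTime's actual code (the original is a Perl script). It assumes pings follow a Poisson process, so the gaps between them are drawn from an exponential distribution. The function name `ping_times` is hypothetical, and the 0.75-hour mean gap mirrors the 45-minute setting mentioned later in the article.

```python
import random

def ping_times(rate_per_hour, horizon_hours, rng):
    """Return ping times (in hours) of a Poisson process on [0, horizon_hours)."""
    times = []
    t = rng.expovariate(rate_per_hour)  # gap before the first ping
    while t < horizon_hours:
        times.append(t)
        t += rng.expovariate(rate_per_hour)  # memoryless gap to the next ping
    return times

rng = random.Random(0)  # seeded only so the sketch is reproducible
week = ping_times(rate_per_hour=1 / 0.75, horizon_hours=7 * 24, rng=rng)
mean_gap_minutes = 60 * (week[-1] - week[0]) / (len(week) - 1)
print(f"{len(week)} pings in a week, mean gap about {mean_gap_minutes:.0f} minutes")
```

Because each gap is drawn independently of the ones before it, knowing when the last ping happened says nothing about when the next one will arrive.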

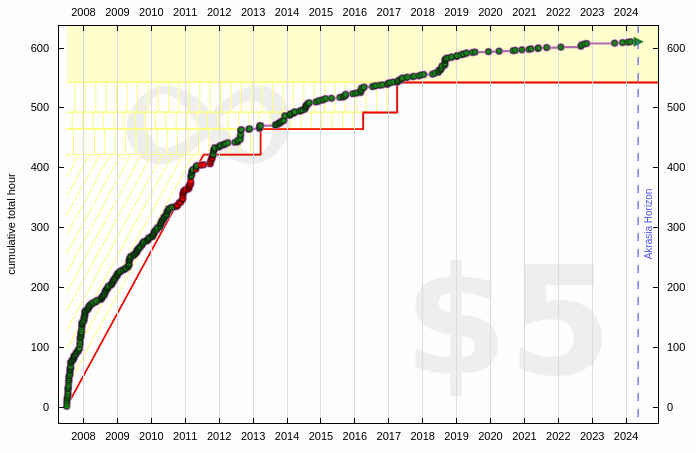

Here’s one of us (Dreeves) averaging two hours a week working on TagTime since it hit the dog food point, which was almost right away since it started as a very simple Perl script:

More examples of things we track automatically with TagTime — including Dreeves’s time spent working on Messy Matters — are here: beeminder.com/tagtime.

So you’ve arranged to have your computer periodically interrupt you while you’re working?

We certainly appreciate the value of not being interrupted. We’ve been using TagTime so long — going on four years — that we don’t recall how much of an adjustment period there was, but we find it doesn’t interrupt our flow at all. We think the difference is that it’s not like, say, a pop-up email notifier that pulls your train of thought off in a new direction. It’s almost the opposite: it reinforces what you’re already focused on. It can even serve to get you back on track if your mind is starting to wander. “Oh yeah, this email is entirely unrelated to the work I intended to be doing. Whoops!”

How much time do you waste on this?

Funny you should ask. TagTime knows. Dreeves spends on average 15 minutes a day (plus or minus 8 [4]) answering pings, and Bethany spends 6 minutes (plus or minus 3). It goes to show that the time you spend answering pings depends somewhat on how much thought you put into it. The taxonomy Bethany uses is simpler than Dreeves’s, plus she spends about 10 hours less at the computer per week (45 hours to Dreeves’s 56), but Dreeves also had a smaller sample period where he used a tag specifically for answering pings (hence the wider confidence interval). With zero effort you can learn the amount of time you spend at the computer versus not (TagTime autotags pings you don’t answer with “afk”).

You could also arbitrarily reduce the average amount of time spent on TagTime by reducing the rate parameter for the pinging Poisson process (i.e., how frequently it pings you), at the cost of increased variance in the time estimates. We have it set to ping every 45 minutes on average.
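The trade-off can be put in numbers with a small sketch under one stated assumption: if an activity truly takes T hours, the number of pings landing on it is Poisson with mean T/gap, and each ping is counted as one gap's worth of time. The estimate then has standard deviation sqrt(gap × T). The function name `estimate_std_hours` is made up for this illustration.

```python
import math

def estimate_std_hours(true_hours, gap_hours):
    """Std dev of the estimate gap * N, where N ~ Poisson(true_hours / gap_hours)."""
    return math.sqrt(gap_hours * true_hours)

for gap_minutes in (15, 45, 180):
    gap = gap_minutes / 60
    sd = estimate_std_hours(true_hours=10, gap_hours=gap)
    print(f"gap {gap_minutes:>3} min: 10 h of activity has a 1-sd error of about {sd:.1f} h")
```

Under this model, pinging a quarter as often doubles the standard deviation of the estimate, while the relative error still shrinks as more hours accumulate.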

Other interesting tidbits about Dreeves in particular, who, as we noted, is more obsessive about his tag taxonomy: He sleeps 6.7 hours per night (when he wakes up he answers all pings he didn’t hear with the “slp” tag), spends 2 hours per week on Hacker News, and a bit over 20 hours per week with his kids.

So how can I be my own Big Brother?

We’ve open sourced the code on github, and if you know how to clone a git repository you can figure it all out just fine, which is to say it is not exactly user friendly. If you’re interested in hearing about development, and being notified if/when it is ready for consumption by normal people, you can drop us a line. There’s also a barebones implementation available on the Android Market. The source code for that is included in the TagTime git repository.

Bonus Puzzle

Suppose you frequently take the bus, arriving at the bus stop at a random time each day. The bus schedule states that, on average, buses arrive 15 minutes apart. But, paradoxically, you notice that you wait 30 minutes on average before catching a bus. How is that possible?

Follow-up question: Could something screwy like that happen with TagTime? Suppose you get paid every time you get pinged while working. (Recall that pings ping per a Poisson process.) What’s your optimal strategy for maximizing your effective hourly pay rate? (For example, if you were God you would only start working for a split second before each ping pings and then immediately stop, thus earning an unbounded amount of money per hour of work. But you’re not God.) If you’re paid d dollars for every ping that pings while you’re working and the Poisson process has rate parameter λ, how much money can you make per hour of work?

Further Reading and Resources

- Joel Spolsky has a brilliant article on Evidence-Based Scheduling which, in particular, proposes another solution to the problem of getting interrupted while tracking your time spent on a task.

- Alternatives to RescueTime: TimeAnalyzer and ProcrastiTracker are Windows-only and we haven’t tried them. ActiveTimer is Mac-only and we have tried it and it’s nice and simple and useful. WhatchaDoing is tantalizingly close to TagTime yet misses the boat. Finally, here’s another collection of alternatives to RescueTime.

- A related hack from psychology is the Experience Sampling Method in which you query people at random times about their emotional state.

Addendum

Congratulations to Andy Whitten for solving the puzzle. He gives a simple example where 4 buses arrive all at once, every hour on the hour. Interestingly, it’s also possible under the constraint that the bus interarrival times are i.i.d.: With high probability the next bus arrives within a split second and with low probability it arrives in an hour.
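Andy's example can be checked with a few lines of Python. This sketch assumes the rider arrives uniformly at random in time, so a gap of length g is hit with probability proportional to g and then costs g/2 of waiting on average. The helper names `mean_headway` and `mean_wait` are hypothetical.

```python
def mean_headway(gaps):
    """Average time between consecutive buses."""
    return sum(gaps) / len(gaps)

def mean_wait(gaps):
    """Average wait for a rider arriving uniformly at random in time.

    A gap g is hit with probability g / sum(gaps) and then costs g / 2
    on average, which gives sum(g^2) / (2 * sum(g)).
    """
    return sum(g * g for g in gaps) / (2 * sum(gaps))

hourly_bunch = [0, 0, 0, 60]       # four buses arriving together each hour
evenly_spaced = [15, 15, 15, 15]   # same average headway, no bunching

print(mean_headway(hourly_bunch), mean_wait(hourly_bunch))
print(mean_headway(evenly_spaced), mean_wait(evenly_spaced))
```

Both schedules average 15 minutes between buses, but only the bunched one produces the 30-minute average wait from the puzzle.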

The follow-up question is related to the waiting time paradox, and the PASTA property as pointed out by Vibhanshu Abhishek in the comments. The upshot is that memorylessness can be counter-intuitive but it has a very handy property for TagTime: There’s no way to game it. If you’re paid d dollars per ping and pings ping every 1/λ hours on average (that is, λ pings per hour) then you’ll make exactly as much on average as if you were just paid an hourly rate of dλ. (For example, if pings ping hourly and you’re paid $100/ping then your effective hourly rate will converge on $100/hour.) Exquisitely fair, in expectation!
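A simulation cannot prove that no strategy beats the system, but it can test one tempting strategy. The sketch below assumes a worker who works until a ping arrives, collects the payment, rests for a fixed break, and then resumes. With λ measured in pings per hour, the pay per hour actually worked should hover around d times λ whatever the break length. The function name and parameters are hypothetical.

```python
import random

def pay_per_work_hour(rate, dollars_per_ping, break_hours, total_hours, rng):
    """Simulate 'work until pinged, then rest' and return dollars per hour worked."""
    t = 0.0        # wall-clock time at which the current work session starts
    worked = 0.0   # total hours spent working
    earned = 0.0   # total dollars collected
    next_ping = rng.expovariate(rate)
    while t < total_hours:
        worked += next_ping - t        # work from t until the ping arrives
        earned += dollars_per_ping     # that ping lands during work, so it pays
        t = next_ping + break_hours    # rest, then resume working
        while next_ping <= t:          # pings during the break pay nothing
            next_ping += rng.expovariate(rate)
    return earned / worked

rng = random.Random(1)  # seeded only for reproducibility
for break_hours in (0.0, 0.5, 5.0):
    rate_paid = pay_per_work_hour(1.0, 100.0, break_hours, 50_000, rng)
    print(f"break {break_hours} h: about ${rate_paid:.0f} per hour worked")
```

With hourly pings and $100 per ping, every break length should land near $100 per hour worked, which matches the article's example.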

Footnotes

[1] EDIT: That’s right, sugarplumbs. They’re a sweet carpentry device. Thanks to Winter Mason for noting the distinction from sugarplums, which we totally did not mean and would be far less interesting to daydream about. Winter thought he was earning the $20 typo bounty. Ha!

[2] We’ve noticed another problem with RescueTime and its ilk, at least on Macs that don’t have focus-follows-mouse but do have “scroll-follows-mouse”: You can be scrolling around on a web page without having changed focus to it.

[3] The key to making it fully unpredictable is to use a memoryless distribution. I.e., knowing exactly when you were sampled in the past tells you nothing about when you will be sampled in the future. TagTime achieves this by pinging you according to a Poisson process, which is to say that the amount of time between each ping is drawn from an exponential distribution.

[4] That’s a 95% confidence interval, which is precisely computable by knowing the number of pings and the amount of time over which those pings were gathered.

Illustration by Kelly Savage

Hi,

Nice read! Especially because you guys are solving a problem we have been working on for years too. I completely agree with your statements on automated tracking. We have found such tools cool and exciting to start with but never quite accurate enough to get the job done well, and they often need more work calibrating than plain manual entry.

We have settled on a solution where we integrate with what you have already planned and shared before: a calendar which you can sync with Google Calendar (and iCal), where you can log your tasks as worked time. This quite often still requires changes but does offer a great reference. Furthermore, we’ve integrated with Twitter so your tweets can give you an idea of what you did when. And for the geeks among us, we are planning a git/svn integration so your commits will show up and can be logged as worked time as well.

I’ll definitely dive into your git repo and have a look. Maybe there are some nice ways to integrate.

Cheers,

Joost Schouten

Yanomo – time tracking and invoicing

This is a really interesting idea. Thanks for posting about it. It’s cool that y’all have been doing it for so long.

On the bonus puzzle, if four buses arrive every hour on the hour then they are averaging 15 minute headways [(0+0+0+60)/4=15]. Given this, if your arrival is uniformly distributed across the hour then your average wait time would be 30 mins. Of course, both the bus schedule and your daily itinerary are absurd, but that is what makes hypotheticals so fun.

I’m curious as to the question of gaming the poisson process. My instinct is that it is impossible, that you can’t time your work-hours to maximize payoff since the ping is equally likely to come at any point. Am I missing something here?

@Joost, Yanomo looks nicely done. Thanks for pointing us to it! Definitely let us know if you incorporate this random sampling idea. I’m not sure if it’s a nerds-only kind of thing where you have to grok the math behind it to really appreciate the value of it. Otherwise it might be too tempting to fudge it when you’re unlucky and get pinged doing something you were only doing for just a moment. I haven’t really thought about how badly that breaks it but the idea is to always answer with what it catches you doing right at that exact moment and then just have faith in math.

@Andy, nicely done! It’s even possible for this to happen if the inter-arrival times are i.i.d., with a minor variation to your solution: With high probability the next bus arrives in 1 second and with low probability it arrives in an hour.

Let me know a URL for you for the addendum to this post!

Really cool. Now I can track whether I am spending my dissertation time actually doing research or reading TC. I was going to point out the Poisson sampling and the PASTA property but saw your footnote :-)

On the bonus puzzle, if you consider the arrival of every bus a renewal process and X as the iid interarrival time (with distribution F(.)), and Y as the time you have to wait for the bus, the limiting pdf of Y is given by p(y) = (1-F(y))/E[X]. The limiting expected wait time is E[Y] = E[X^2]/(2E[X]).

In the case of exponential interarrival times this is 2E[X]. So you need to wait twice as long. But don’t we all know that buses don’t arrive according to a memoryless process ;-) Oh, the small joys of modeling…

@Vibhanshu, I’m confused by your apparent claim that for exponential interarrival times your average wait time is twice the average interarrival time. I might be reading that wrong.

See the addendum I’m just now adding for my answer…

Are there any plans to create an iPhone app? I’m not guaranteed to be at a computer, but my phone is always with me.

@Donovan: We’ve got Android so far, but not iPhone. Email support@beeminder.com to be added to the announcement list for new versions of TagTime. Or use this form: http://beeminder.com/contact?code=TAGTIME

I really like the Android implementation because, as Donovan says, I always have my phone with me. Installed it and have been logging, but can’t figure out how to obtain a graph of “results”. Is there some way to get a graph out of the Android app, or do I need to import my logs into the TagTime desktop code? Thanks!

@L: Need to import, and even then there are just some command-line tools for getting stats about your tags and time spent. To get an actual graph the only option is to link to Beeminder.

Sorry that we *still* don’t have a friendlier implementation of TagTime itself! See my previous comment about getting notified when we have something to announce.

It’s not a problem to put the data into Beeminder, since I was thinking about using Beeminder anyway. But, after futzing around for a while, I’m still a little puzzled about what exactly I need to do. Is there a way to directly import the timepie/TagTime logs to Beeminder? Or do I need to get it into the desktop app, then export something else that goes into Beeminder? (I did futz around with trying to get log information into Beeminder, but wasn’t successful.) Either way, can you list the steps required?

I don’t mind the implementation being a little complex; I just need a little help using it, apparently! I am hoping that maybe others might benefit from your response as well.

Thanks again!

playsound seems not to function on Lion: “the PowerPC architecture is no longer supported.”

[…] And we started tracking our time — which we had written a nifty time tracker for called TagTime, which this is also not a pitch for (in fact it’s not exactly user friendly — it […]

What is the correct method for calculating the confidence intervals for time spent on a tag?

During a period of 43 hours, there were 13 pings where I was sitting on the floor. Using the formula here http://en.wikipedia.org/wiki/Poisson_distribution#Confidence_interval I calculate that the 95% confidence interval is [6.922, 22.230] pings. So that gives an interval of [43/22.230, 43/6.922] = [1.934, 6.212] hours. Right? Seems too easy..

I believe this is the formula for a c-confidence interval (eg, c=.95) and a TagTime gap of g (eg, g=.75) for n pings:

$$ \left\{ g\, Q^{-1}\left(n, \frac{c+1}{2}\right),\; g\, Q^{-1}\left(n, \frac{1}{2}-\frac{c}{2}\right) \right\} $$

So for 13 pings I’m getting {5.19146, 15.7212}.
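For anyone who wants to reproduce these numbers without Wolfram Alpha, here is a standard-library Python sketch. It assumes Q is the regularized upper incomplete gamma function, which for a positive integer n equals the probability that a Poisson variable with mean x is at most n - 1, and it inverts Q by bisection. The function names and the bisection bracket are choices made for this illustration, not part of TagTime.

```python
import math

def upper_gamma_q(n, x):
    """Regularized upper incomplete gamma Q(n, x) for a positive integer n.

    For integer n this equals P(Poisson(x) <= n - 1).
    """
    if n < 1:
        raise ValueError("n must be a positive integer")
    term = math.exp(-x)
    total = term
    for k in range(1, n):
        term *= x / k
        total += term
    return total

def inverse_q(n, q):
    """Solve Q(n, x) = q for x by bisection (Q decreases from 1 to 0 in x)."""
    lo, hi = 0.0, 10.0 * n + 50.0  # assumed bracket, generous for moderate q
    for _ in range(200):
        mid = (lo + hi) / 2
        if upper_gamma_q(n, mid) > q:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

def hours_interval(n_pings, gap_hours=0.75, confidence=0.95):
    """Confidence interval, in hours, per the formula quoted above."""
    low = gap_hours * inverse_q(n_pings, (confidence + 1) / 2)
    high = gap_hours * inverse_q(n_pings, (1 - confidence) / 2)
    return low, high

low, high = hours_interval(13)
print(f"13 pings: between {low:.2f} and {high:.2f} hours")
```

With 13 pings and a 0.75-hour gap this should agree with the interval quoted above to within rounding.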

Thanks.

Interestingly 0.75*[6.922, 22.230] = [5.191, 16.673], and if I take out the “+2” in the right side of the formula I linked above, I get 0.75*[6.922, 20.962] = [5.191, 15.721]. The “+2” there seems to correspond to a “+1” on the right side of your formula: http://www.wolframalpha.com/input/?i=%7Bg+InverseGammaRegularized%5Bn%2C+%281+%2B+c%29/2%5D%2C+++g+InverseGammaRegularized%5Bn%2B1%2C+1/2+-+c/2%5D%7D+where+g%3D.75%2C+c%3D.95%2C+n%3D13

Interesting! I think I vaguely see what may be going on here. Your formula is for a Poisson mean which is the expected number of pings that happen, or something. The expected amount of time in which a number of pings happen is related to that but is about the lengths of the gaps between the pings, hence multiplying your numbers by the average gap length, and the off-by-one issue.

At one point I believe I double checked my formula with a little simulation so I’m fairly confident in it but if you have reason to doubt it, definitely let me know.

I think I share your intuition, and my simulations, while inconclusive so far, seem to support not using the +1.

However, without the +1, you get no upper bound when you observe 0 pings. This makes me wonder if the +1 should be there, but maybe the duration of the experiment should be adjusted to account for 1/2 of the gap bleeding off either edge of the range between the first ping and the last (e.g. if estimating hours/week from a week of observed pings, ignore any pings which fall within g/2 of the start or end of the week, or consider the experiment to last 1 week + g).

Thanks for this bug report! Realistically, since this is tangential to Beeminder, where all my energy is at the moment, I probably won’t fix this till I’m on Lion myself.

I’ll definitely accept pull requests though! http://tagti.me

Can’t wait ’til this is baked! I’m too nooby/lazy to figure out how to use it as it currently stands, but once it’s done I’ll use the hell out of it. I’ve downloaded the Android app, but since most of my work is done on the computer, I’m not sure if I’ll have the patience to be fumbling with my phone every X minutes. (Plus when I’m listening to stuff while working I can’t always hear my phone.) I’ll give it a try, though. It’s a brilliant idea, and the Beeminder integration pushes it over the top. Any chance of ever being able to superimpose two tag graphs (say, “slacking” versus “billable work”) for us freelancers with bad work/life balance?

I’m having trouble with this, too. I know this thread is ancient now, but is there a way to get my timepie.log info from android into beeminder? Does it have to go through the desktop app or what? I don’t mind if it is complex, I just don’t see any way of doing it at all.

The TagTime implementation is still pretty half-baked! If you’re a hacker and want to dive in with us on github, that would be awesome.

There’s also a tagtime google group — https://groups.google.com/group/tagtime — which would probably be a better place to pose (excellent) questions like this one.

If you just want to know when it’s more fully baked, get on our announcement list: http://beeminder.com/contact?code=TAGTIME

Thanks so much for all the excitement about TagTime!

Is there any way to run TagTime on Windows?

It *should* work with cygwin on windows, which is not to say it does…

You wrote that TimeAnalyzer is an alternative to RescueTime. The correct reference would be Visual TimeAnalyzer from http://www.neuber.com/timeanalyzer. TimeAnalyzer is just a simple copycat, though better known in the U.S.

That’s a cool idea and addresses exactly the problems I have with other time tracking tools.

However, I wonder one thing: How do you know how much time you spend using TagTime itself?

I assume that you don’t answer with “TagTime” when it pings you. Does it store the time you take answering the ping?

Ooh, yes, TagTime tracks exactly how long you spend using TagTime itself. It doesn’t even need any special accounting to do so. I just use the tag “ap” (for “answering pings”) and if a new ping pings while I’m answering the last one then the new ping is tagged “ap”. I have averaged just under 5 minutes per day answering pings over the last couple years.

Bethany spends quite a bit less than that because she puts less thought into her tag ontology. (For example, she can’t answer this question very well because she hasn’t been consistent in using a tag for “answering previous pings” :))

It seems that there is a missing parameter. Shouldn’t you need the number of pings matching the condition as well as the number of pings not matching the condition? I only see one of those parameters here (n, which I think refers to the former only).

Consider situation A: I have 1 week of pings, 10 of which for activity X. Situation B: I have 10 weeks of pings, 100 of which are for activity X. Surely both of these sample means will be the same but the confidence interval for B should be much smaller.

I am not strong in statistics so can’t really help beyond that, though.

Actually, I think you should model pings as independent Bernoulli trials. You have an activity that is happening with some probability p and tagtime occasionally takes a sample which is either a yes or a no. Given the total number of samples you took, and how many “yes” samples you saw, you want a confidence interval for p.

This is exactly the confidence interval for a binomial distribution, and there are many formulas to estimate this: http://en.wikipedia.org/wiki/Binomial_proportion_confidence_interval

I used the Wilson score interval with the example earlier in this thread: 13 out of 58 samples were “yes” samples, for a sample p of 13/58 = 0.224. Plugging that into wolfram alpha:

http://www.wolframalpha.com/input/?i=%7B+%281+/+%281%2Bz%5E2/n%29%29%28p+%2B+z%5E2/%282n%29+-+z*sqrt%28+p%281-p%29/n+%2B+z%5E2/%284n%5E2%29%29%29%2C+%281+/+%281%2Bz%5E2/n%29%29%28p+%2B+z%5E2/%282n%29+%2B+z*sqrt%28+p%281-p%29/n+%2B+z%5E2/%284n%5E2%29%29%29+%7D+where+n%3D58%2C+p%3D13/58%2C+z%3D1.96

I get a confidence interval for p of {.1359, .3466}. Multiply by (gap=0.75) * (n=58) for a confidence interval for time spent of {5.912, 15.077}.

Those other formulas for Poisson confidence interval seem to be related to sampling a Poisson variable for an estimate of its parameter lambda. But with tagtime, the only Poisson process is the one that decides “do I ping in this second or not?” and its parameter lambda is already known (it’s the “gap” setting).

In contrast, given that a ping already means tagtime has decided to ping (how’s that for tautological), the Poisson aspects are irrelevant. Your response to the ping is a Bernoulli sample of whether your desired tag is present or not. The unknown parameter is the true probability p of your tag being present at any given point in time. The Poisson properties only come into play when scaling p into real time (supposing that the true probability is actually such-and-such, and that I was sampling on average every 45 minutes, how much time was I really spending doing that activity?)

Forgot to mention, the z=1.96 in the Wolfram Alpha link is hard-coded normal-distribution-quantile for 95% confidence interval.
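The Wilson score calculation from this comment can also be written as a short Python sketch, for readers who would rather not decode the Wolfram Alpha link. The function name `wilson_interval` is hypothetical. The inputs (13 of 58 pings, z = 1.96, gap of 0.75 hours) are the ones given in the comment.

```python
import math

def wilson_interval(successes, trials, z=1.96):
    """Wilson score interval for a binomial proportion."""
    p = successes / trials
    z2 = z * z
    denom = 1 + z2 / trials
    center = p + z2 / (2 * trials)
    half = z * math.sqrt(p * (1 - p) / trials + z2 / (4 * trials * trials))
    return (center - half) / denom, (center + half) / denom

low_p, high_p = wilson_interval(13, 58)

# Scale the proportion to hours: 58 pings at an average gap of 0.75 hours.
gap_hours = 0.75
low_h = gap_hours * 58 * low_p
high_h = gap_hours * 58 * high_p
print(f"p in [{low_p:.4f}, {high_p:.4f}], hours in [{low_h:.2f}, {high_h:.2f}]")
```

This should reproduce the interval quoted in the comment to within rounding.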

Aha! It’s not that there’s a missing parameter, it’s that I’m making a hidden assumption. I think it will be more clear with an extreme example:

Suppose my TagTime gap is 45 minutes, per usual, and I work for 45 minutes. Despite the googol-to-one odds, I manage to get pinged 100 times during that time. Half the pings are for activity X and half are for activity Y. So *given* the highly unlikely ping sequence, the best estimate of my time is 22.5 minutes on activity X and 22.5 minutes on activity Y.

That’s much more accurate than my formula above which will think I spent thousands of minutes on each activity (50 pings’ worth each).

BUT, if pings actually happen every 45 minutes — and in the long run, on average, they do — then both methods agree, and you don’t have to worry about the denominator.

So you’re quite right, but over the course of days and weeks it doesn’t matter, and TagTime is only accurate over days or weeks anyway, where the average gap between pings will be very close to 45 minutes.

So you might as well just treat each ping as representing 45 minutes for the tagged activity, regardless of how many pings didn’t match or how long you were tagging your time.
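That rule of thumb is easy to sketch in Python. The example below assumes pings are already available as lists of tags, one list per ping. It does not parse TagTime's real log format, and the function name `hours_per_tag` is hypothetical.

```python
from collections import Counter

def hours_per_tag(pings, gap_hours=0.75):
    """Credit each ping's tags with one average gap's worth of time.

    pings: iterable of tag lists, one list per ping.
    """
    counts = Counter(tag for tags in pings for tag in set(tags))
    return {tag: n * gap_hours for tag, n in counts.items()}

sample = [["work", "email"], ["work"], ["afk"], ["work", "code"]]
totals = hours_per_tag(sample)
print(totals)
```

A ping with several tags credits each of them in full, so totals across tags can exceed the elapsed time.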

PS: You’re quite right (in your other comment below) that you can model this as many consecutive Bernoulli trials. Like every second there’s a tiny independent probability of a ping happening. The equations we’re using actually compute this for the limit where the Bernoulli trials happen continuously with infinitesimal probabilities, with pings still happening every 45 minutes on average.

I’m interested in installing this. I’m just wondering if anyone knows what the behaviour is if the computer is off.

It catches back up when the computer is turned on and automatically tags all the missing pings as “off” and “afk”.

Could someone help me figure out how to get my actual tagtime values to plot in beeminder? I have tagtime linked to a beeminder goal and it posts the tags themselves to the beeminder goal, however the value is still zero. Is this an easy fix you can point me to? Thanks.

Surprising! Best to email support@beeminder.com with a link to the Beeminder goal in question.

Hello,

We’ve recently launched our personal productivity software which currently has over 500 users. Although it’s still a beta version, Kiply allows you to track your activity in order to know how you really spend your time while using your computer.

With Kiply you automatically record your activity in real time and keep it completely private. You can view your activity either on the web or on your desktop app, as well as create new projects and see your progress according to your goals.

You can download Kiply beta version from our website http://www.kiply.com at no cost. Please share your thoughts about it! :)